I’ve run a good-sized (30 person) web development shop for eight years now. I’ve seen well over a hundred startups crash and burn from close up, a few stellar successes, and a fair number of lifestyle businesses. I could, and may, write a whole series of blog posts on dumb things to stop doing to your startup, but right now I have one in particular in mind. Stop speculating about the knowable!

To paraphrase Epictetus: “There are things which you can know and things which you cannot know.” I like the way he opens his Enchiridion, so I’ll continue paraphrasing: “Of the things which you can know are what button color and what intro text increase conversion, what features your users want and which ones just confuse them, and how much they are willing to pay for your service. Of the things you cannot know are when TechCrunch will pick up your press release, how many competitors you will have in six months, and how much funding you’ll be able to raise.” Regarding the latter matters, you might have goals and hopes, but for those things I would refer you to the original quotation.

In my first years in this business, I really perceived decisions like button color, tagline text, and pricing page design to be a bit of a crapshoot. Later, with a bit of experience under my belt, I started thinking that there were best practices for dealing with decisions like these. I was able to confidently tell my clients things like “design matters to investors” and “there’s a fine and dangerous line between viral marketing and spam” and “it’s too risky to base your business on violating someone else’s patent or terms of service.” When I was uncertain, I could always advocate for following the leaders: just make your login process like Basecamp, Twitter, Facebook, etc. It’s an imperfect solution, because your users are not their users and they may have different expectations of your service. What’s more, copying “best practices” closes the door to innovation.

But then, when I read Steve Blank’s and then Eric Ries’ books, I had one of those face palm/ah-ha moments of being smacked with the obvious. Supposedly I’m a scientist, and somehow the scientific method never occurred to me as a way to reduce startup risks. D’oh!

Just last week I was stuck in the middle of a debate between a client and one of my managers. The question was whether to ask for a credit card when users sign up for a time-limited free trial. The service in question already exists, with over 30,000 users and nearly 100 new signups a day. I tolerated the discussion for all of five minutes and finally lost my cool.

It was after hearing “I don’t sign up for trials that ask for my credit card because I am afraid of forgetting to cancel in time” and “I don’t take a trial seriously if I’m not signed up to pay” that I broke in.

“This is knowable!” I said. “You are describing two normal points of view that can be found among users, but what we don’t know, and could know in a day or two*, is which point of view is most common among our users. Once we know that, we can make the decision that maximizes revenues.” There is no point in debating knowable things with inadequate information, especially when new tools make it easy to gather enough statistically valid data to make the right choice every time.

Of course, I’m talking about A/B testing, which is the simple process of creating two versions of a design, showing them to different sets of users, and then tracking what they do. A simple example would be showing half of your users a green signup button and half a red signup button. If 5% of visitors who see the green button click on it and 22% of the visitors who see the red button click on it, then you should probably make the button red rather than green. At least, if you want people to click on it. That’s a very basic example of what can be done with A/B testing. In future posts I’ll be writing more about how to design and use tests like this to get real, actionable data for making decisions. The point is that any debate over button color is probably a waste of time and has a very good chance of costing you a lot of users if the guy who likes green has a louder voice or more seniority.
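As a rough sketch of the button example above, the snippet below splits visitors into two groups and checks whether a difference in click rates is larger than chance would explain. The function names, the hash-based split, and the 1,000-visitors-per-button counts are all assumptions made for illustration; the post itself only gives the 5% and 22% click rates. The check is a standard two-proportion z-test, not any particular tool's method.

```python
import hashlib
import math


def assign_variant(user_id, variants=("green", "red")):
    """Deterministically place a visitor in one variant (hypothetical helper).

    Hashing the id means the same visitor always sees the same button.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def two_proportion_z_test(clicks_a, visitors_a, clicks_b, visitors_b):
    """Return (z, two_sided_p) for the difference between two click rates."""
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    # Pooled rate under the assumption that both buttons perform the same.
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Assumed counts: 1,000 visitors per button, 5% vs 22% click rates.
z, p = two_proportion_z_test(clicks_a=50, visitors_a=1000,
                             clicks_b=220, visitors_b=1000)
print(f"z = {z:.2f}, p = {p:.3g}")
```

With a gap that wide and that many visitors, the p-value is vanishingly small. With a few dozen visitors per button, the same percentages would be far less convincing, which is why the counts matter as much as the rates.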

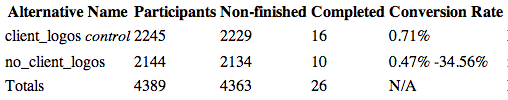

Just hours ago the Kanbanery team debated the value of having client logos on the landing page. We set up a simple A/B test with two versions of the page. Half the visitors saw the old page and half saw the same page with this bit added:

You can see the early results below. It’s too early to tell, but after only a matter of hours it’s already clear that a gap is forming between the conversion rates for the landing pages with and without the logos. By the end of the day we should have enough results to confidently say that we can increase, perhaps double, conversion rates by including the logos on the landing page. It’s not a matter of debate. It’s a knowable fact.

With large amounts of long-term potential revenues hanging on every decision, there’s no value in debating opinion. Get the options on the table, design the tests, run them, and then do the right thing. It is true that it takes more time to test an assumption, and it takes courage to accept when you’re wrong. Time will tell for me, but my initial impression is that it’s better to do half as much and do it right than lurch blindly forward hoping to trip over a pot of gold in the fog.

There’s no guarantee that any startup will succeed. My impression is that most web startups are a one in a thousand shot. Learning from users and testing assumptions might only make that a one in 500 shot, but that’s a whole lot better and it’s just dumb to devote years of your life to shooting blind.

* [addendum: I believe I was mistaken in this advice. I’ve seen A/B tests drift in their early days, illustrating that even with large amounts of data, there is variation in populations based on time of day and date. Therefore, I now feel that a test should run at least a week to be certain of the outcome, regardless of how much data is collected.]

Comments on this entry are closed.

Regarding how long you should run a test before coming to a conclusion, see http://www.evanmiller.org/how-not-to-run-an-ab-test.html. In summary, you need to decide ahead of time how many trials you’ll execute before you test for a significant difference or you run the risk of a false result.
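One way to fix the number of trials in advance is a textbook sample-size estimate for comparing two conversion rates. The sketch below is only that: the function name, the 5% significance level, the 80% power, and the example rates are assumptions, not figures from the post or from the linked article.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Estimate visitors needed per variant to detect the given difference.

    Uses the normal-approximation formula for a two-sided test of two
    proportions. All default values are assumptions for illustration.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    mean_rate = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * mean_rate * (1 - mean_rate))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate)
            + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Assumed example: hoping to lift conversion from 5% to 6%.
print(visitors_per_variant(0.05, 0.06))
```

The smaller the difference you want to detect, the more visitors you need, so it pays to decide up front what size of improvement is worth acting on.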

Thanks for the link. I agree that it’s important to decide in advance how many trials to execute at a minimum, but I think a time frame is important, too. People behave differently on some websites on Monday and on Sunday, so you could get misleading data if you run a test for too short a time period, no matter how many visitors you get.